Proposal

- Jun 14, 2012

- 2 Comments

Problem

The problem I would like to contribute to is the domain expert's experience of submitting test code, so that domain experts are better able to help other Looking Glass users. The problem has three aspects: the test code interface, the validation of candidate test code, and the implementation of validated test code.

Importance

Improving the creation and submission of test code matters because domain experts, including experienced programmers and those well versed in the Looking Glass environment, have something to offer less experienced programmers and novice Looking Glass users. The test code interface takes advantage of the fact that domain experts are better able to recognize good coding standards, as well as improvements that novice Looking Glass users could make to their worlds and, more broadly, to their programming habits.

With test code in place, less experienced programmers and Looking Glass users will be better able to learn to program in the Looking Glass environment, and these tests will reward them at various stages as their programming abilities improve. This will presumably improve the overall experience of new Looking Glass users by making the learning curve more manageable.

The test code procedure will also build a stronger sense of community among domain experts, since (as described in detail later in this post) prospective test code will have to be approved by multiple domain experts. This sense of community will, I hope, lead to an increase in both the quality and the quantity of submitted test code.

In the end, the test code procedure will help invigorate the Looking Glass community by allowing domain experts, with their accumulated knowledge and experience, to give feedback to less experienced Looking Glass users.

I use the term “test code procedure” to describe a timeline that includes the creation of the test code, its submission, its validation by the Looking Glass community, and its implementation. This timeline is described in detail later in this post.

Plan

The plan for improving the test code procedure involves three integral parts: the interface for writing and submitting test code, the process of validating submitted code, and the implementation of validated code. The interface includes the API that domain experts will use when writing test code.

The next sections of this post detail these three parts and then walk through the test code procedure from the perspective of an end user (in this case, a domain expert).

Interface

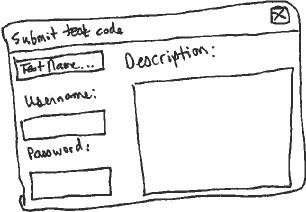

Currently, there is a basic interface for test code in place, which at this point is a mockup of the end product. One of my goals this summer will be to improve the interface so that authoring and submitting test code is a smooth experience for domain experts.

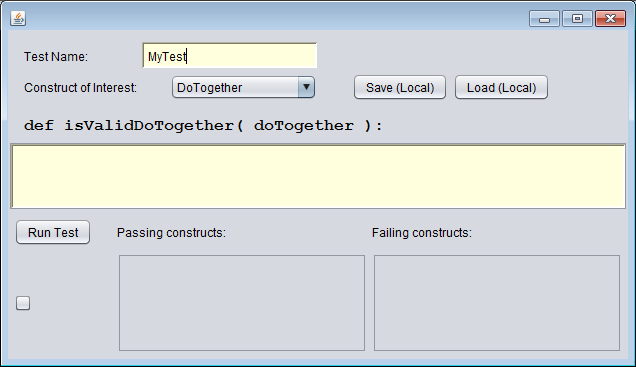

Below is an image of the current state of the test code user interface.

At this point, the interface is not very helpful during the authoring process. I plan to add a dialogue box that displays the console output produced when the domain expert's script runs. This way, while authoring a script, the domain expert will be able to see its output and outcome and, if needed, debug it with the aid of the dialogue box. Since most scripts will be simple, I imagine domain experts will rarely need the dialogue box for debugging, but I feel that providing it will improve the user experience.
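One way the dialogue box could get its contents is to capture whatever a script prints while it runs. The Java sketch below assumes, purely for illustration, that a test script can be invoked as a `Runnable`; `ScriptConsole` and `runAndCapture` are hypothetical names, not part of the existing Looking Glass code. It temporarily redirects `System.out` and `System.err` into an in-memory buffer and returns the captured text, including a short note if the script throws.

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that collects a script's console output for the dialogue box. */
final class ScriptConsole {
    private ScriptConsole() {}

    /**
     * Runs the script with System.out and System.err redirected to a buffer,
     * restores the original streams, and returns everything the script printed.
     * Uses Java 10+ Charset overloads of PrintStream and ByteArrayOutputStream.
     */
    static String runAndCapture(Runnable script) {
        PrintStream originalOut = System.out;
        PrintStream originalErr = System.err;
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        PrintStream capture = new PrintStream(buffer, true, StandardCharsets.UTF_8);

        System.setOut(capture);
        System.setErr(capture);
        try {
            script.run();
        } catch (RuntimeException e) {
            // Show the failure in the dialogue box instead of letting it escape to the IDE.
            capture.println("Script failed: " + e);
        } finally {
            System.setOut(originalOut);
            System.setErr(originalErr);
            capture.flush();
        }
        return buffer.toString(StandardCharsets.UTF_8);
    }
}
```

`ScriptConsole.runAndCapture(() -> System.out.println("hello"))` returns `hello` followed by the platform line separator. Because `System.setOut` is global to the JVM, this approach is only safe when one script runs at a time; if the scripts run on a server, as planned, the server could capture output the same way and send the text back to the IDE.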

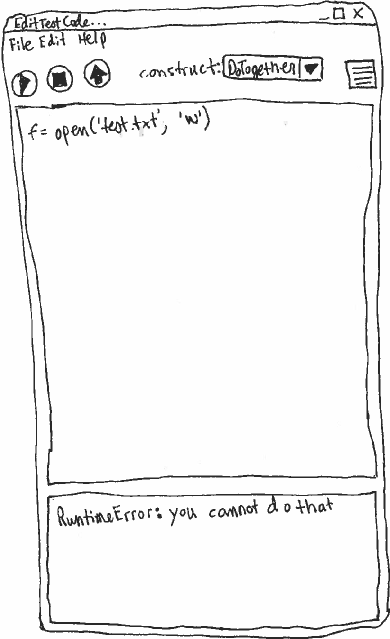

The dialogue box will be an integral part of the test code user interface, and the updated interface will be designed around the script text area and the dialogue box. Below is a rough sketch of what I imagine the updated user interface will look like.

The interface is partially inspired by the Arduino and Processing IDEs. I wanted the interface to be simple, elegant and intuitive. Its centerpiece is the text field for the test code, with the dialogue box below it offering feedback when the user chooses to run her script.

Above the text box are several buttons. The play and stop buttons run the script and stop a running script. The up arrow next to them submits the script for validation. The validation process, including the submission of test code, is described in greater detail in the next section.

Next to the up arrow button is a drop-down menu where the user can choose which construct their script tests for. The button next to this drop-down menu will link to the test code API documentation. I imagine that the API documentation will be hosted online so that, as the test code procedure evolves, the API and its documentation can be changed more easily.

Since the test scripts will run on a server rather than on the user’s computer, the API will remain under the control of the Looking Glass team and, as mentioned previously, will therefore be easier to change as the test code procedure evolves and more users submit test code.

At this point, the API is basic and does little to make authoring test code easier for the user. Ultimately, the API should be an intuitive tool that helps domain experts write high-quality test code. It will include methods for easy access to the various parts of the world that the script is testing.

At this point, it would be infeasible to come up with a definite plan for the specific methods and classes that the improved API will include. The best time to do this is after the Looking Glass team conducts its study of experienced programmers. This study will offer insight into how an experienced programmer interprets the Looking Glass environment and the worlds that can be created in it. What the Looking Glass team learns from the study will guide the direction of the API, since the programmers taking part in it are, in many ways, the kind of programmers who will be authoring test code.
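Even without a definite plan, a rough sketch may help show what “easy access to the various parts of the world” could mean in practice. Everything below is hypothetical: `WorldView`, `ConstructTest`, `TestResult`, the statement-kind strings such as `"CountLoop"`, and the in-memory `MapWorld` stand-in are illustrative names only, and the real classes should come out of the study. The idea is that a test declares the construct it checks for (matching the drop-down in the editor) and returns a pass/fail result with a message for the novice.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical read-only view of a world that a test script could query. */
interface WorldView {
    /** Names of the procedures the world's author wrote. */
    List<String> procedureNames();

    /** Kinds of statement used in a procedure, e.g. "CountLoop" (names are hypothetical). */
    List<String> statementKinds(String procedure);
}

/** Outcome of running one test on one world, with a message for the novice. */
final class TestResult {
    final boolean passed;
    final String message;

    TestResult(boolean passed, String message) {
        this.passed = passed;
        this.message = message;
    }
}

/** What a domain expert would implement; construct() matches the editor's drop-down. */
interface ConstructTest {
    String construct();

    TestResult run(WorldView world);
}

/** Example test: passes if any procedure uses a count loop. */
final class UsesCountLoop implements ConstructTest {
    @Override
    public String construct() {
        return "CountLoop";
    }

    @Override
    public TestResult run(WorldView world) {
        for (String procedure : world.procedureNames()) {
            if (world.statementKinds(procedure).contains("CountLoop")) {
                return new TestResult(true, "Nice! " + procedure + " uses a count loop.");
            }
        }
        return new TestResult(false, "Repeated statements can often be replaced by a count loop.");
    }
}

/** In-memory stand-in for a real world, handy for trying a test out. */
final class MapWorld implements WorldView {
    private final Map<String, List<String>> procedures = new LinkedHashMap<>();

    MapWorld add(String procedure, String... statementKinds) {
        procedures.put(procedure, List.of(statementKinds));
        return this;
    }

    @Override
    public List<String> procedureNames() {
        return new ArrayList<>(procedures.keySet());
    }

    @Override
    public List<String> statementKinds(String procedure) {
        return procedures.getOrDefault(procedure, List.of());
    }
}
```

A test like `UsesCountLoop` stays short because the API hides how worlds are stored: the domain expert only asks which procedures exist and which kinds of statements they contain.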

Validation

The validation process begins when a user submits test code to the community. Validating test code means testing it to make sure that it generalizes to a variety of contexts. The most feasible way to do this is to give the job of testing the code on various worlds to members of the community, specifically other domain experts, who can run the scripts on a wide range of worlds to confirm that they work.

When a user submits test code, it will be uploaded to the community site and presented on a page specially designed to house submitted test code. On this page, a domain expert will be able to select a submitted test and run it on a variety of worlds to make sure that it is well written, in the sense that it generalizes and addresses a problem a novice programmer might encounter. A domain expert who has run the test on several worlds may approve it for implementation or offer constructive criticism for improving it. Ideally, submitted code should be validated by multiple domain experts before it is approved for implementation.

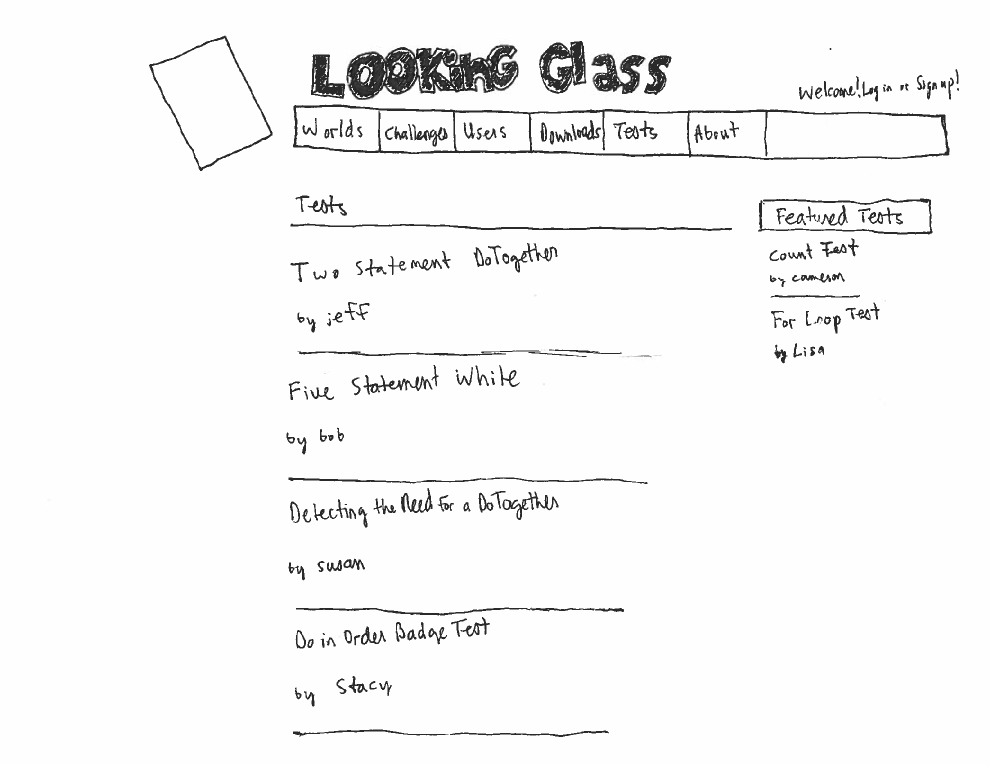

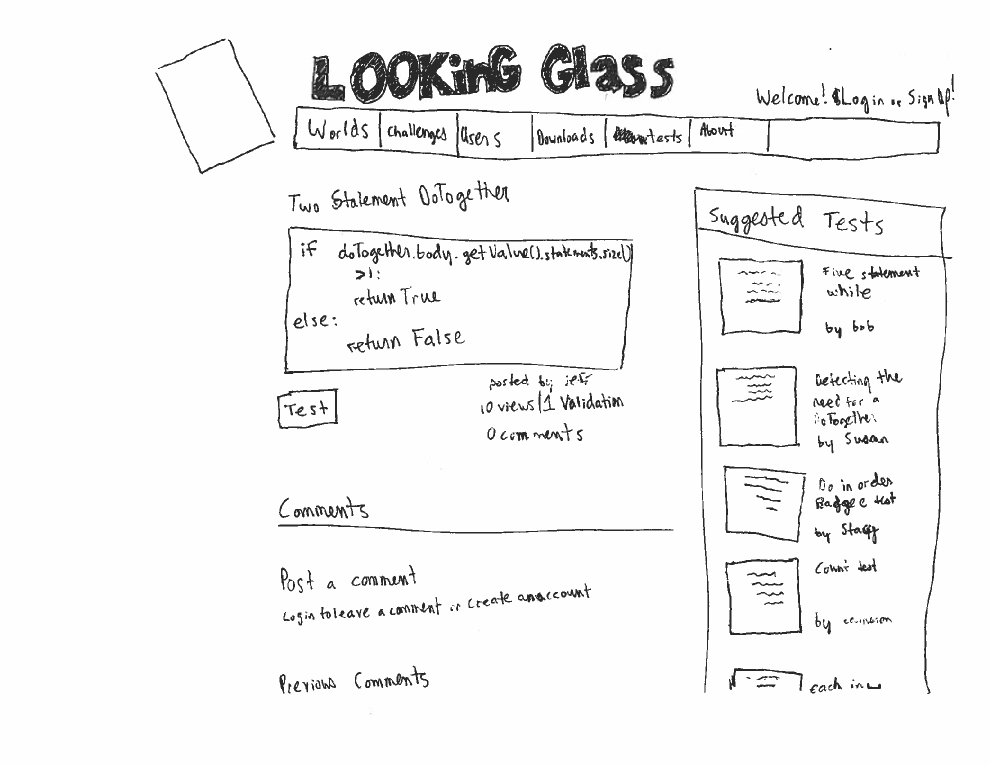

Below are several sketches of what the community page for submitted test code might look like.

So that community members can easily find user-submitted test code awaiting validation, there should be a prominent tab or button on the website that directs users to it. In the sketches, I have placed this tab at the top of the page, in the same bar as the worlds and challenges tabs, but the tab need not be placed there in the final product.

The test code homepage will include a paginated list of tests that have not yet been validated. Each test will have its own page, where its code will be displayed.

To try the code, a visitor simply presses the test button under the script’s text box. This leads to a page where she can run the test on various user-submitted worlds and see the result of each run. Based on the result, she may then click the validate button under the video of the test world, which marks the test as validated for that particular world. Since we want the test to be validated for a variety of worlds, a test will likely need validations from multiple visitors before it becomes fully validated (that is, validated for a number of worlds determined by the Looking Glass team). If the test fails or does not do what it is intended to do, the visitor may leave a comment for the test author describing what went wrong and possible solutions. For this reason, the community site should let the original author edit submitted scripts.

Once the test has received enough validations, it will be removed from the list of tests to be validated, and will be implemented.
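The validation rules above can be sketched as a small piece of bookkeeping. This Java sketch is hypothetical (`SubmittedTest` and its methods are illustrative names), and it makes three assumptions the proposal leaves open: the required number of worlds and of distinct validating experts are constructor parameters standing in for whatever the Looking Glass team decides, authors may not validate their own tests, and editing a test after feedback clears its earlier validations, since they applied to the old code.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical bookkeeping for one test awaiting validation on the community site. */
final class SubmittedTest {
    private final String author;
    private final int requiredWorlds;   // distinct worlds the test must be validated on
    private final int requiredExperts;  // distinct experts who must validate it
    private final Map<String, Set<String>> expertsByWorld = new HashMap<>();
    private String code;

    SubmittedTest(String author, String code, int requiredWorlds, int requiredExperts) {
        this.author = author;
        this.code = code;
        this.requiredWorlds = requiredWorlds;
        this.requiredExperts = requiredExperts;
    }

    String code() {
        return code;
    }

    /** Records that an expert ran the test on a world and pressed "validate". */
    void validate(String worldId, String expert) {
        // Assumption: authors cannot validate their own tests.
        if (expert.equals(author)) {
            throw new IllegalArgumentException("authors cannot validate their own tests");
        }
        expertsByWorld.computeIfAbsent(worldId, w -> new HashSet<>()).add(expert);
    }

    /** The author edits the test after feedback; assumption: old validations no longer count. */
    void revise(String newCode) {
        code = newCode;
        expertsByWorld.clear();
    }

    /** True once enough distinct worlds and enough distinct experts have validated the test. */
    boolean isFullyValidated() {
        Set<String> experts = new HashSet<>();
        for (Set<String> validators : expertsByWorld.values()) {
            experts.addAll(validators);
        }
        return expertsByWorld.size() >= requiredWorlds && experts.size() >= requiredExperts;
    }
}
```

Counting worlds and experts separately means a single enthusiastic reviewer cannot push a test through alone, which matches the goal of having multiple domain experts approve each test.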

Implementation

Of the various parts of this proposal, the implementation is, to be honest, the least developed, mainly because I do not yet have the necessary information and knowledge. I expect that what is needed to plan and carry out the implementation of accepted test code will come as the summer and this project progress.

I would, however, like to provide a basic outline of a plan for the implementation phase of the test code procedure. Validated test code should, at some point in the process of world creation, be run on worlds created by novice or inexperienced Looking Glass users. It would be a bit awkward for this testing to happen when the user tries to share the world with the community, and it would be intrusive to run the tests at random while the world is being made.

With this in mind, it seems most reasonable for users to have some control over when the tests are run on their worlds. A user who wants the kind of help a test can provide will most likely be a novice Looking Glass user and therefore may not even be aware of the testing functionality. Ideally, then, users should be pointed to the tests early in their introduction to the Looking Glass IDE, for example through a short tutorial or an added step in an existing tutorial.

I imagine the user being able to run validated test code on their world by pressing a button located either in the World drop-down menu in the top left-hand corner of the IDE or in the top right-hand corner of the IDE (next to the Challenge Info, Remix and Share buttons).
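Pressing such a button could run every validated test on the user's world and sort the results into rewards and advice. In this hypothetical Java sketch, a world is reduced to the set of construct names it uses, and `ValidatedTest`, `Feedback` and `WorldChecker` are illustrative names; a real implementation would inspect the world's code through the test code API instead.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical validated test as the IDE might download it from the community site. */
final class ValidatedTest {
    final String name;
    final Predicate<Set<String>> check; // true if the world passes
    final String reward;                // shown when the world passes
    final String advice;                // shown when it does not

    ValidatedTest(String name, Predicate<Set<String>> check, String reward, String advice) {
        this.name = name;
        this.check = check;
        this.reward = reward;
        this.advice = advice;
    }
}

/** What the novice sees after pressing the (hypothetical) "check my world" button. */
final class Feedback {
    final List<String> rewards = new ArrayList<>();
    final List<String> advice = new ArrayList<>();
}

final class WorldChecker {
    private WorldChecker() {}

    /** Runs every validated test; here a world is simplified to the set of constructs it uses. */
    static Feedback runAll(List<ValidatedTest> tests, Set<String> constructsInWorld) {
        Feedback feedback = new Feedback();
        for (ValidatedTest test : tests) {
            try {
                if (test.check.test(constructsInWorld)) {
                    feedback.rewards.add(test.reward);
                } else {
                    feedback.advice.add(test.advice);
                }
            } catch (RuntimeException e) {
                // Skip a broken test so the others still run; a real system might report it to its author.
            }
        }
        return feedback;
    }
}
```

Catching exceptions per test is a deliberate choice: one broken test should not prevent a novice from seeing feedback from all the others.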

Walkthrough

The process will begin when the domain expert authors a script.

Presumably, before submitting the script, the domain expert will have run it on a self-authored world in order to test and, if needed, debug it.

After testing and debugging the script, she submits it to the community by pressing the up arrow button, and a submission screen appears.

Once the domain expert submits the test, the test will appear on the test page of the community site.

There, other domain experts can run the script on several worlds to ensure that the test works correctly in a variety of contexts.

Once the test has been validated on enough worlds, it will be implemented, and novice users will be able to run it on their own worlds, either to be rewarded for including various programming concepts or to receive advice on how to program their worlds better.
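The walkthrough above amounts to a small state machine. The sketch below models it as a Java enum; the stage names are my own labels rather than terms from the Looking Glass codebase, and the `SUBMITTED` to `SUBMITTED` transition stands for the author revising a test in response to comments.

```java
import java.util.EnumSet;
import java.util.Set;

/** Hypothetical lifecycle of a test, following the walkthrough. */
enum TestStage {
    DRAFT,        // being written and debugged in the IDE
    SUBMITTED,    // on the community site, collecting validations and comments
    VALIDATED,    // validated on enough worlds by enough experts
    IMPLEMENTED;  // novices can run it on their own worlds

    /** Stages reachable in one step from this one. */
    Set<TestStage> next() {
        switch (this) {
            case DRAFT:
                return EnumSet.of(SUBMITTED);
            case SUBMITTED:
                // Staying in SUBMITTED covers the author editing the test after feedback.
                return EnumSet.of(SUBMITTED, VALIDATED);
            case VALIDATED:
                return EnumSet.of(IMPLEMENTED);
            default:
                return EnumSet.noneOf(TestStage.class);
        }
    }

    /** Moves to the target stage, rejecting transitions the walkthrough does not allow. */
    TestStage advanceTo(TestStage target) {
        if (!next().contains(target)) {
            throw new IllegalStateException(this + " cannot move to " + target);
        }
        return target;
    }
}
```

Making illegal jumps (for example, from `DRAFT` straight to `IMPLEMENTED`) throw keeps unvalidated code from ever reaching novice users.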

Discussion

I realize that this is a very ambitious proposal. Over the past several weeks, I have come to understand and appreciate this part of the Looking Glass IDE, and for this reason I intend to work very hard to carry out my plans as well as I can. This proposal is, I hope, a reflection of the dedication I am willing to put into this aspect of the IDE.

Comments

caitlin said:

There are some cool ideas here!

One thing I want to push back a little on is the assumption that where the tests get authored and where they get run need to be the same place. Ultimately the "correct" tests and even "candidate" tests will get run by the server, but the IDE is a much better place to develop and test them. I don't know if this is still working in your branch or not, but the passing and failing tests section was designed to help test authors to review their tests quickly to see whether the actual constructs in a given world that pass/fail that test are the ones they would expect. It seems like reviewing a larger collection would want to also happen in the IDE because then a test author can look at the code for each of the test worlds and decide whether or not the results are correct. If we do it on the web, then we have to worry about how to render the code in that space so that test authors can make informed decisions about whether or not their tests are working.

On the super positive side, we've gotten some volunteers to participate in the expert programmers study, so you should get to see some of that first hand.

There's some really nice progress here! Look forward to chatting about it when we get back.

kyle said:

This looks really great. A couple of things I'd like for you to keep in mind... For Processing and the like... there are lots of resources (i.e. text books and classroom materials) that make their minimalist interface work. Our domain experts will not likely have these resources so we may need more in our interface... but maybe we could think about writing the code tests in a Looking Glass perspective!? This may then give you the ability to use the current Looking Glass program to help author the test (since we are in a perspective not a dialog). The test author could click on a button (or similar) like in Dinah and click "Author test about this line of code" which we could then insert into the test editing pane a simple example test or something... it might be interesting to think about all the ways which we could author tests to make this process accessible to our domain experts. What are your thoughts?

I also like your thoughts for how the domain expert may upload the test to the site. You may also want to think about this more... maybe give them the ability to update a test from the site that they have already uploaded... or even run the current test on some worlds from the site on their computer (locally)!

Really nice work.
