Week 4

- Jun 21, 2012

- 1 Comment

This post details updates to the plans and designs introduced in my proposal. It covers the mentor/mentee relationship that underlies the test code functionality, as well as the updated interface design.

Mentor/Mentee Relationship

While the relationship will be a relatively flexible one, it is important that a relationship like this be documented. For the time being, only documented mentors will be able to author tests. In theory, a mentor will notice that his or her mentee may not be doing something correctly. The mentor will then be able to create a test, thus authoring a “rule,” in order to help other novice Looking Glass users who may have the same problem as his or her mentee.

Because we are dealing with children, a level of safety that might otherwise seem excessive is justified here. Only documented mentors will be able to author these rules, since an undocumented user could author a malicious script. Documenting mentor/mentee relationships should ensure that mentors are trusted sources who, presumably, will not author malicious tests.

A cross-validation procedure will be in place in order to verify that submitted tests are not malicious and are generalizable to a variety of worlds. This procedure will include the farming out of newly-submitted tests to other mentors for validation. These randomly chosen mentors will then be able to run the submitted test in various contexts in order to ensure that the test works properly.
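The reviewer assignment described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `assign_reviewers`, the mentor identifiers, and the default of three reviewers are all hypothetical and not part of the actual design.

```python
import random


def assign_reviewers(author, mentors, num_reviewers=3, rng=None):
    """Pick random documented mentors (other than the author) to validate a test.

    The names and the default of three reviewers are assumptions made
    for illustration only.
    """
    rng = rng or random.Random()
    # The author should not be asked to validate their own test.
    candidates = [m for m in mentors if m != author]
    if len(candidates) < num_reviewers:
        raise ValueError("not enough other mentors to review this test")
    # sample() draws without replacement, so no mentor is chosen twice.
    return rng.sample(candidates, num_reviewers)
```

Drawing reviewers at random, rather than letting the author choose them, makes it harder for a malicious test to be approved by a friendly reviewer.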

Ideally, the test author will have the ability to re-upload or edit a test once it has been submitted for approval so that, if another mentor finds a fault with the test, the author need merely edit the script to account for that fault.

Once a test has been validated a predetermined number of times, it is added to the set of tests that are run on submitted worlds. When the test is run on a world and finds a place that may need improvement, the author of the world will be notified that a portion of their code could be improved. The user will then be asked whether this advice was helpful. This feedback will help determine the lifecycle of the test. If users repeatedly say that the test's advice was not helpful, the test is likely not very useful to Looking Glass in general. At a certain point, useless tests should no longer be run on user worlds.
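One way to picture this lifecycle is the sketch below. The class name `RuleRecord` and every threshold value are assumptions; the post does not yet fix how many validations are required or how much negative feedback retires a test.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the real values are still to be decided.
REQUIRED_VALIDATIONS = 3   # mentor approvals needed before a test goes live
MIN_FEEDBACK = 20          # do not judge a test on too few responses
MIN_HELPFUL_RATIO = 0.25   # below this share of "helpful" answers, retire


@dataclass
class RuleRecord:
    name: str
    validations: int = 0
    helpful: int = 0
    unhelpful: int = 0

    def record_feedback(self, was_helpful):
        """Store one user's answer to "was this advice helpful?"."""
        if was_helpful:
            self.helpful += 1
        else:
            self.unhelpful += 1

    def should_retire(self):
        """True once enough users have said the advice was not helpful."""
        total = self.helpful + self.unhelpful
        if total < MIN_FEEDBACK:
            return False
        return self.helpful / total < MIN_HELPFUL_RATIO

    def is_active(self):
        """A test runs on submitted worlds only if validated and not retired."""
        return self.validations >= REQUIRED_VALIDATIONS and not self.should_retire()
```

Requiring a minimum amount of feedback before retiring a test keeps one or two unhappy users from removing a test that is useful to most.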

It would also be in our interest to get rid of duplicate tests, that is, tests that do the same thing for each world. If we have 15,000 tests that all look for a simple for loop, we should, in theory, get rid of 14,999 of them. The challenge comes in determining whether a test is a duplicate. I think the best way to do so is to run the tests on a variety of worlds and see which outputs match each other. This duplicate check will have to be a rigorous one, since we obviously do not want to get rid of tests that are not duplicates.
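The output-comparison idea could look something like the sketch below. It assumes, purely for illustration, that each test is a function that takes a world and returns a hashable result; `find_suspected_duplicates` and the toy worlds are hypothetical.

```python
from collections import defaultdict


def find_suspected_duplicates(tests, worlds):
    """Group tests that produce identical output on every sample world.

    `tests` maps a test name to a callable that takes a world and returns
    a hashable result (for example, True/False or a tuple of flagged lines).
    """
    groups = defaultdict(list)
    for name, run_test in tests.items():
        # The tuple of outputs across all worlds acts as the test's signature.
        signature = tuple(run_test(world) for world in worlds)
        groups[signature].append(name)
    # Only signatures shared by two or more tests are suspicious.
    return [sorted(names) for names in groups.values() if len(names) > 1]


# Toy worlds, each represented as a tuple of construct names.
sample_worlds = [("for", "say"), ("while", "move"), ("for", "for")]
sample_tests = {
    "has_for_a": lambda world: "for" in world,
    "has_for_b": lambda world: world.count("for") > 0,
    "has_while": lambda world: "while" in world,
}
suspects = find_suspected_duplicates(sample_tests, sample_worlds)
```

Matching outputs on a sample only suggests that two tests are duplicates. Running on more, and more varied, worlds lowers the chance that two genuinely different tests happen to agree, and a flagged group should still be confirmed before anything is removed.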

Interface

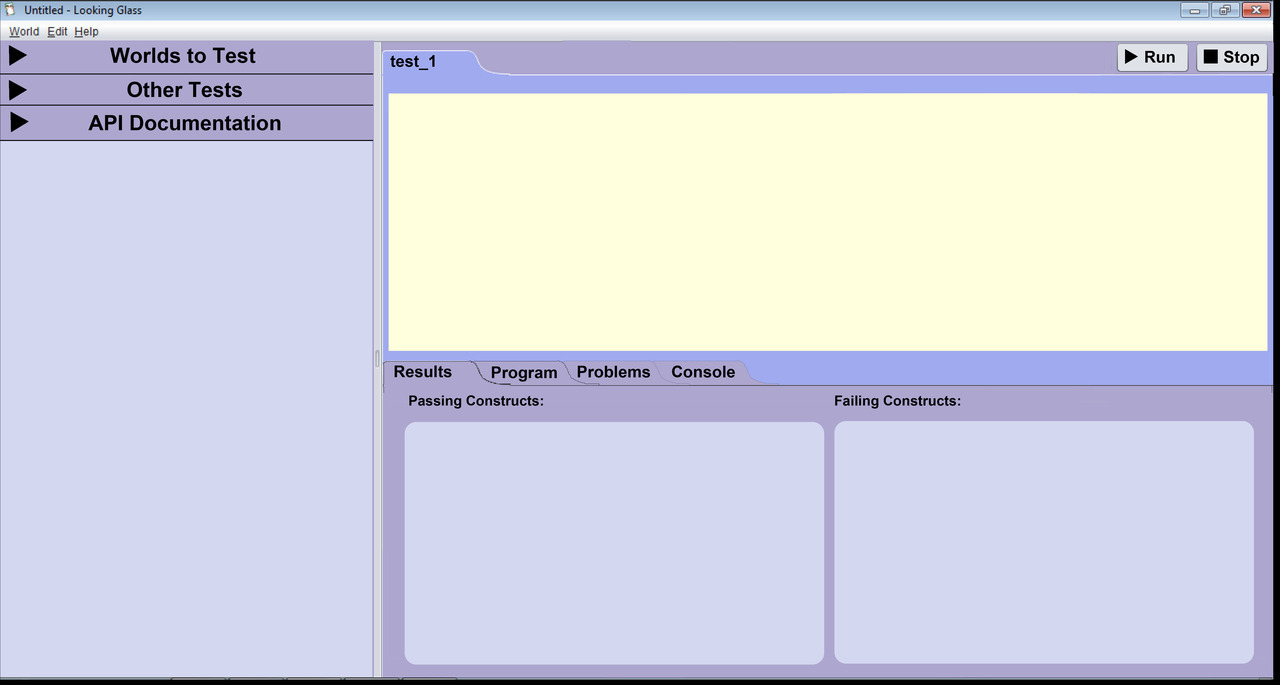

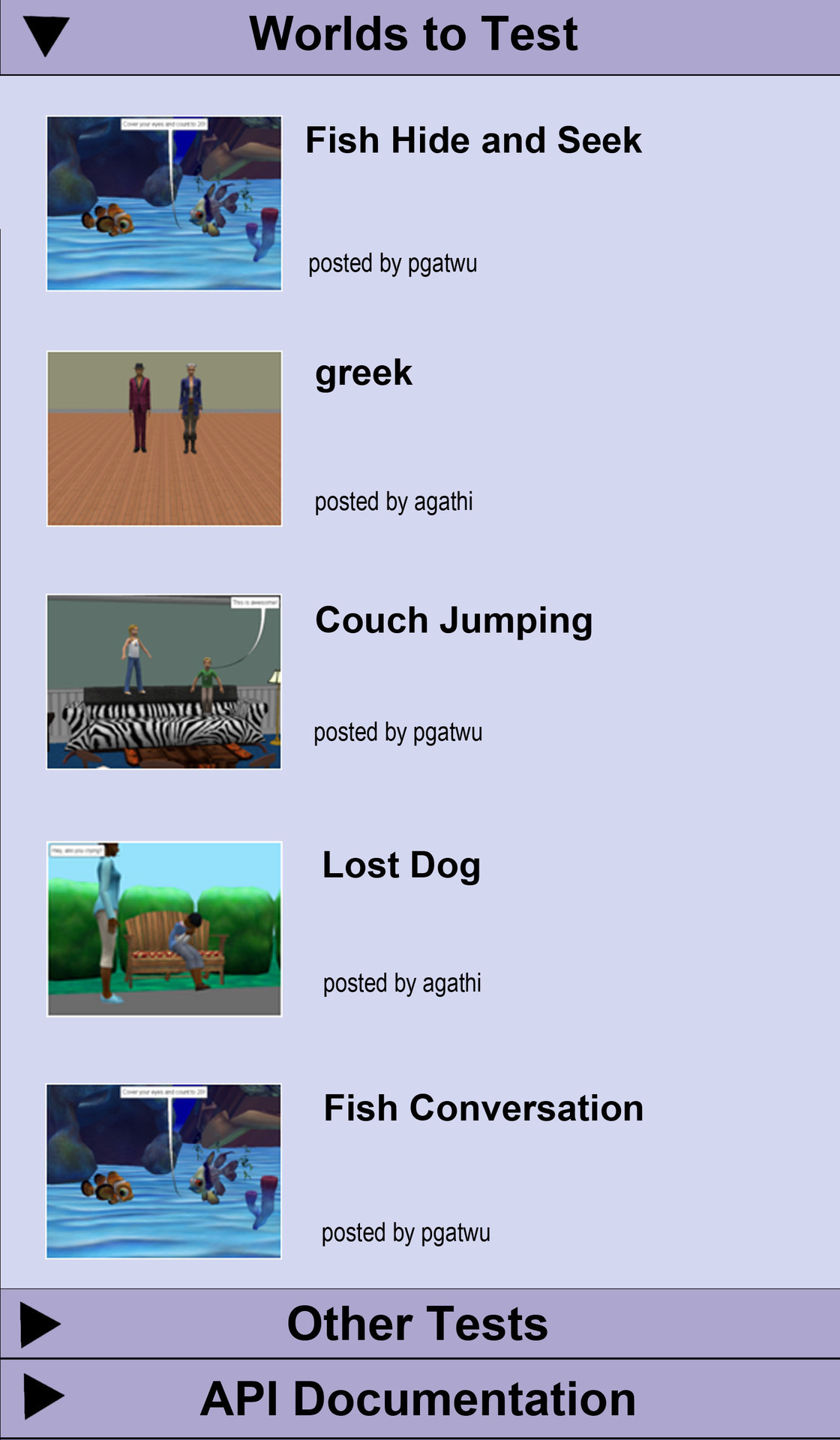

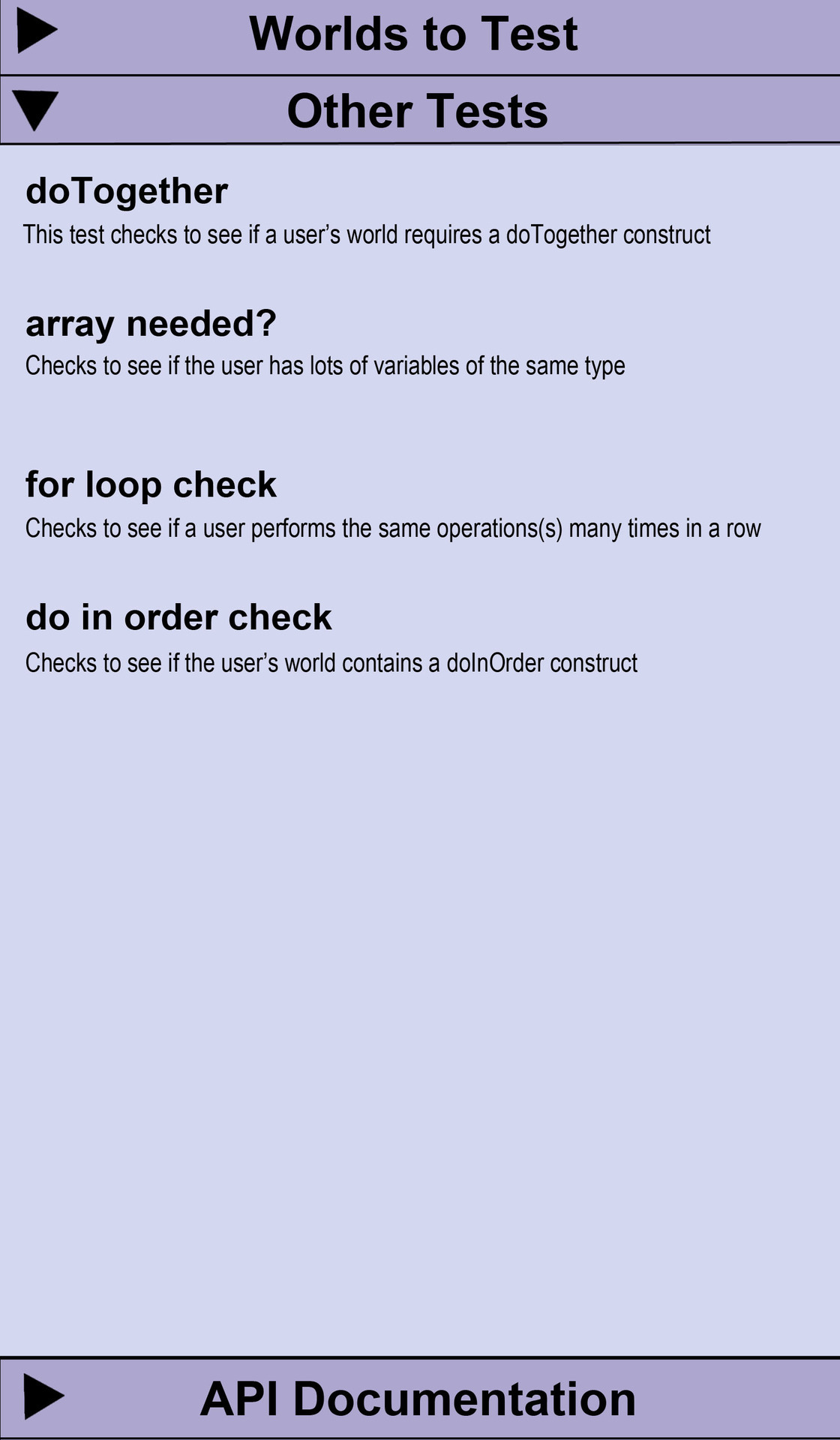

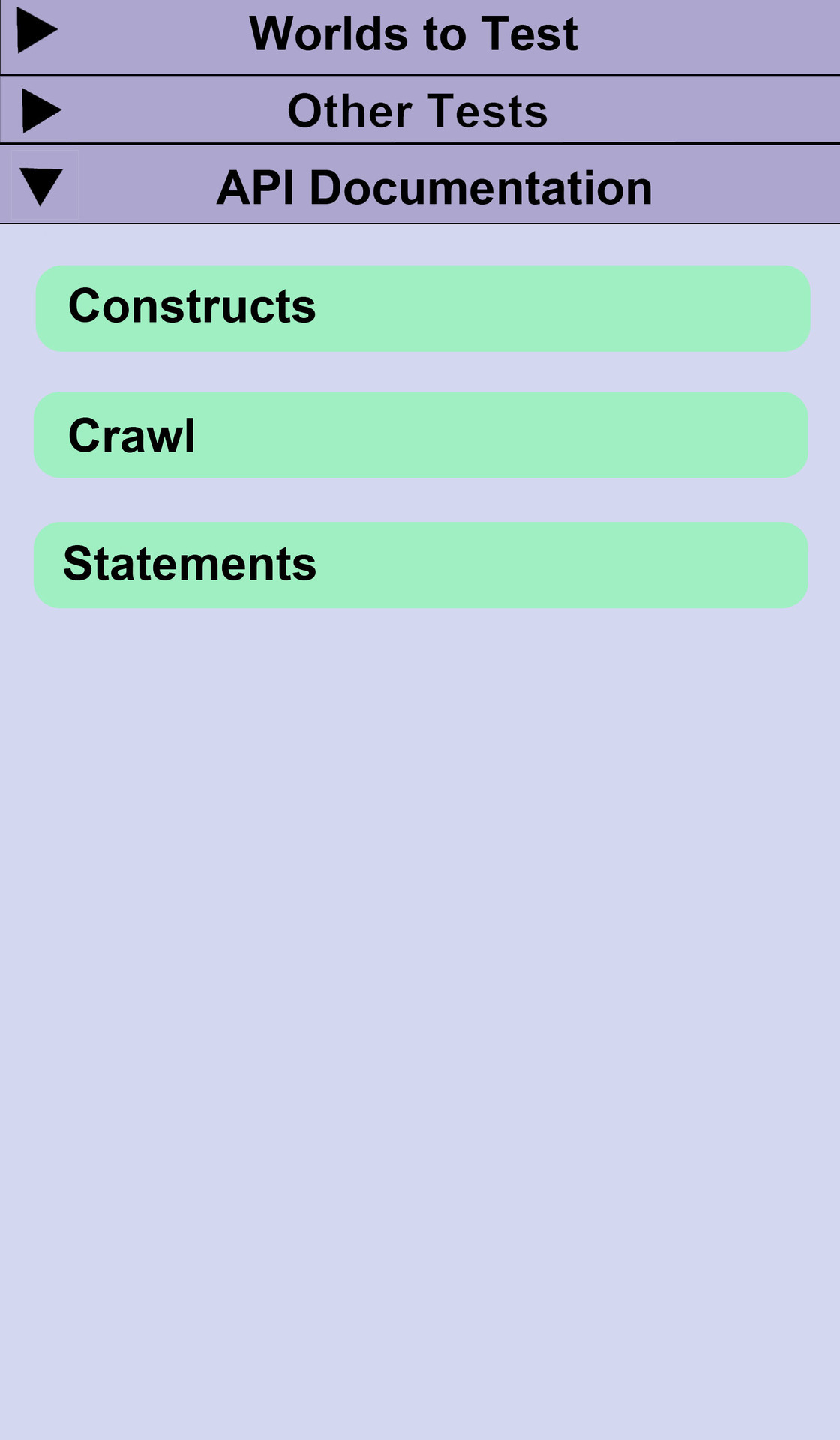

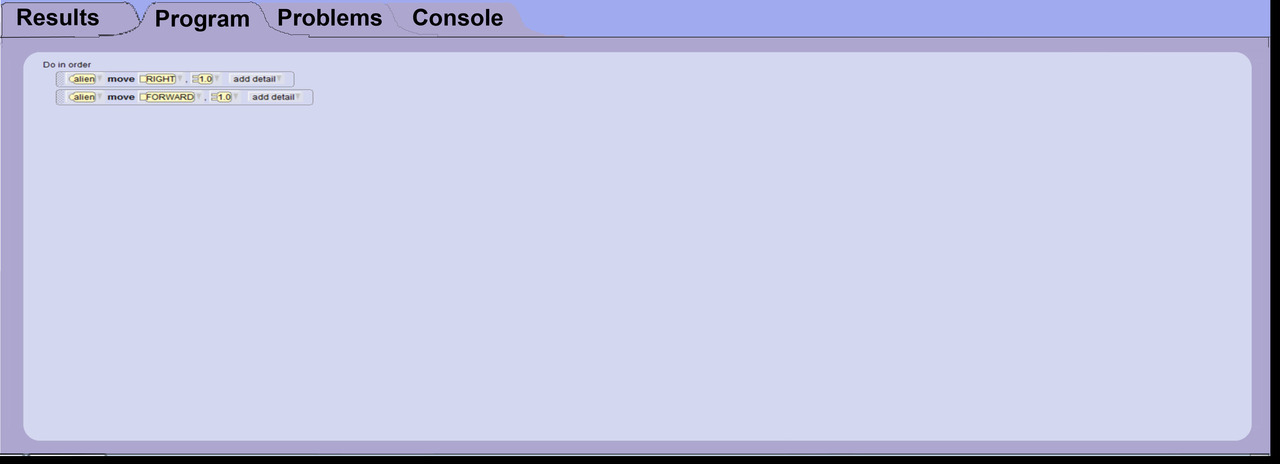

Below are several images of the interface for test code authoring.

Below is the left pane in various views.

Below is the bottom pane in Program view.

Comments

caitlin said:

Comparing the output of scripts seems like a good way to identify potentially overlapping tests. Perhaps we can then send "suspected overlapping" tests out to a set of mentors and ask if they are actually doing the same thing or not.

The mockups of the interface seem promising. The longest element is likely to still be kids' programs, so that might want to still get the primetime top right spot, particularly since there may be sub-tabs for different open methods. I like the view of "here are some programs you should look at." Do we want to auto-run the draft of the test on those and say how many constructs are passing and failing in the side bar with the title and author? One of the things in the original super-prelim interface was an interface that helped the test-author to quickly navigate to and look at all of the segments of code in a given world that both pass and fail the test.

I would still like to consider whether we can present a series of stages in the interface and I'd be excited to see some sketches that explore how to do that.

Nice job with this - it's a pretty big potential design space and you're laying out some very strong possibilities.
